Artificial intelligence (AI) and machine learning (ML) have taken off in financial services as computing and data storage resources have become cheaper. Banks and financial institutions (FIs) are integrating these technologies to reimagine an expanding set of business processes. FI CIOs and CTOs should adopt a comprehensive design and oversight approach that makes these AI-driven processes explainable and transparent, so that they adhere to regulatory principles such as non-discriminatory outcomes.

Artificial Intelligence Has Taken Off in Financial Services

AI and ML are increasingly leveraged by FIs to reinvent internal and customer-facing processes, leading to efficiency gains and improved service outcomes. These advanced technologies are being deployed across a range of use cases including automated investment advice, customer service chatbots, and anti-money laundering analytics.

As demand grows for AI/ML-based use cases that deliver on the promise of business transformation, FI IT leaders should explicitly address how information about the design and use of AI-based processes and models is captured and made transparent, in support of strategic IT objectives.

The main consideration for CIOs and CTOs is that AI/ML-based algorithms should be built to adhere to guiding principles such as fair, non-discriminatory outcomes and transparent decisions, which in turn enables the required regulatory reporting.
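As a rough illustration of what checking for non-discriminatory outcomes can look like in practice, the sketch below compares approval rates across customer groups. It is a minimal example under stated assumptions, not a method taken from the report: the function names, the group labels, and the 0.8 review threshold are all hypothetical, and a real FI would use the metrics and thresholds its own regulators and compliance teams specify.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    decisions: iterable of (group, approved) pairs, where approved is a bool.
    The group labels are hypothetical placeholders.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def approval_rate_ratio(decisions):
    """Ratio of the lowest group approval rate to the highest (1.0 = parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals at all, so no disparity can be measured
    return min(rates.values()) / highest


# Hypothetical sample: group A approved 8 of 10, group B approved 4 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)

REVIEW_THRESHOLD = 0.8  # assumed value for illustration only

ratio = approval_rate_ratio(sample)
print(f"approval rate ratio: {ratio:.2f}")
if ratio < REVIEW_THRESHOLD:
    print("flag model for fairness review")
```

A check like this is only one input to a fairness assessment. Its value for reporting comes from being simple enough to compute routinely and to explain to a non-technical reviewer.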

IT Architecture Must Be Designed to Promote Transparency of AI-Driven Processes

As I write in my latest report, CIO/CTO Checklist: AI and ML: Explaining Algorithm Outcomes to Regulators, “The FI’s enterprise and solution architecture must be designed to support [AI] and [ML] algorithms in a way that provides regulators the information they need to confirm alignment with guiding principles for these technologies.”

This means that FIs must be able to explain to regulators, customers, and potential customers how AI-driven outcomes are generated. To build transparency into how AI/ML is leveraged, basic principles of AI use, such as fairness and non-discrimination, must be designed into the solution and enterprise architecture.

By doing so, FIs will mitigate the risks (e.g., lawsuits, reputational damage) of the typical “black box” phenomenon, in which the algorithms’ decisions and recommendations are difficult, if not impossible, to understand from the inputs they were given.
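To make the contrast with a “black box” concrete, here is a minimal sketch of a model whose outcome can be traced back to its inputs: a simple linear score in which each input's contribution is reported alongside the result. The function name, feature names, weights, and bias are hypothetical values chosen for illustration. This is not the approach described in the report, and more complex models generally need dedicated explanation techniques.

```python
def explain_score(weights, bias, features):
    """Score an applicant with a linear model and explain the result.

    Returns the score and a list of (feature, contribution) pairs,
    ordered from the largest absolute contribution to the smallest.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical model parameters and applicant inputs.
weights = {"income": 0.5, "debt": -1.0}
bias = 0.25
applicant = {"income": 4.0, "debt": 1.5}

score, reasons = explain_score(weights, bias, applicant)
print(f"score: {score}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

Because every contribution is recorded with the decision, the same record can be shown to a regulator or used to tell a customer which factors weighed most heavily.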

FI CIOs and CTOs should partner with business leaders to adopt practices that support explainability as part of a comprehensive design approach.

Best Practices for CIOs/CTOs in Leveraging and Explaining AI Algorithms

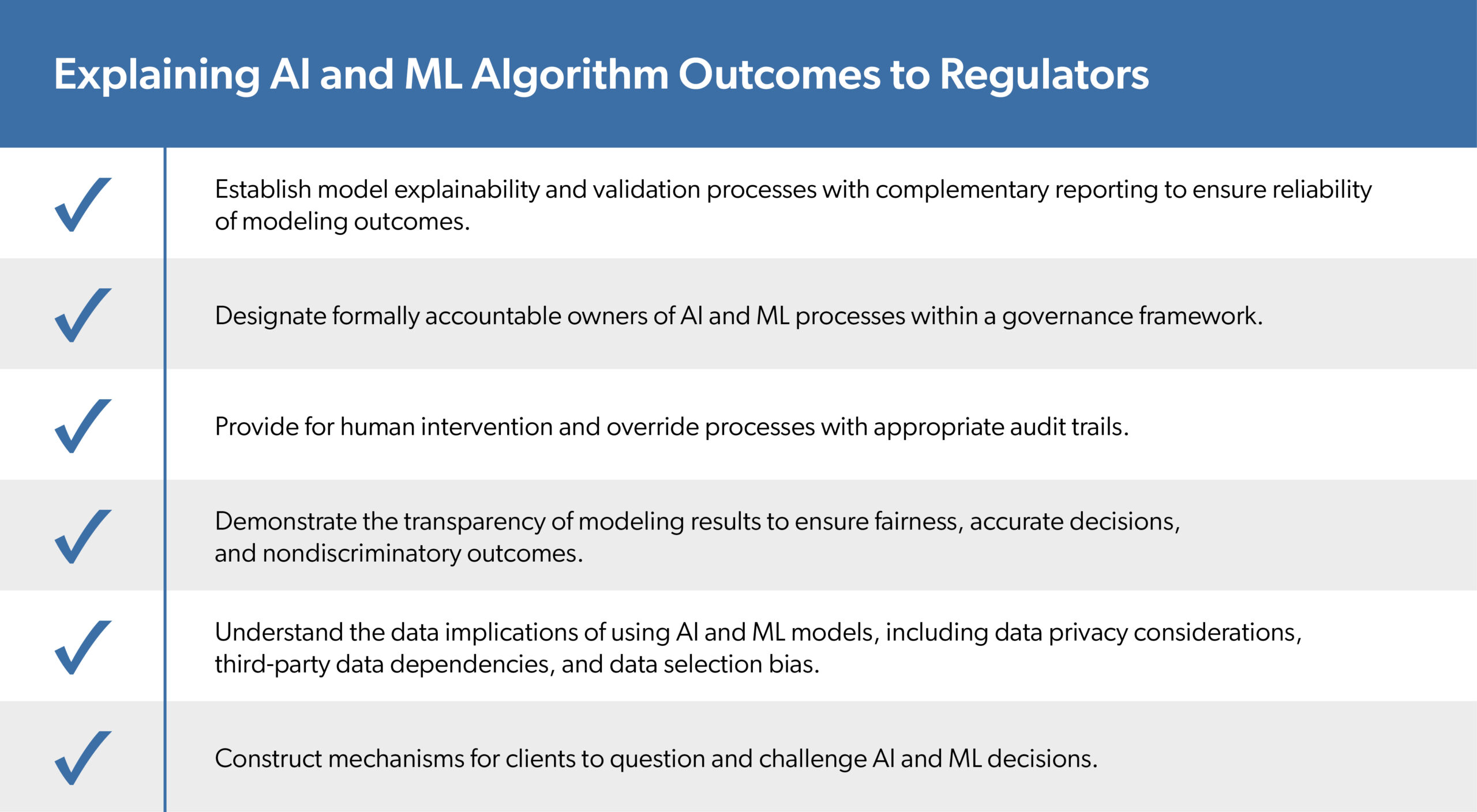

My team identified six best practices to help FI CIOs and CTOs design and oversee AI/ML-driven models and processes in a way that promotes explainability and transparency, in line with regulatory principles. These are summarized in the table below:

Table A: The Checklist

The checklist in this table, also outlined in my latest report, facilitates IT leaders’ efforts to design AI/ML-based models and processes that both fit within the enterprise’s IT strategy and enable transparency in providing regulators with required information.

Available exclusively to members of the Financial Services CIO/CTO Advisory Practice, these practices give banking and FI IT leaders a framework for designing AI algorithms and AI-driven financial processes that are explainable.

Would it help to explore how these best practices can support your organization’s initiatives to develop AI-based processes that adhere to regulatory requirements? Contact me at [email protected] to start the conversation.